what does it mean to say something is statistically significant

Statistical significance is the probability of finding a given deviation from the null hypothesis -or a more extreme one- in a sample. Statistical significance is often referred to as the p-value (short for "probability value") or simply p in research papers.

A small p-value basically means that your data are unlikely under some null hypothesis. A somewhat arbitrary convention is to reject the null hypothesis if p < 0.05.

Example 1 - 10 Coin Flips

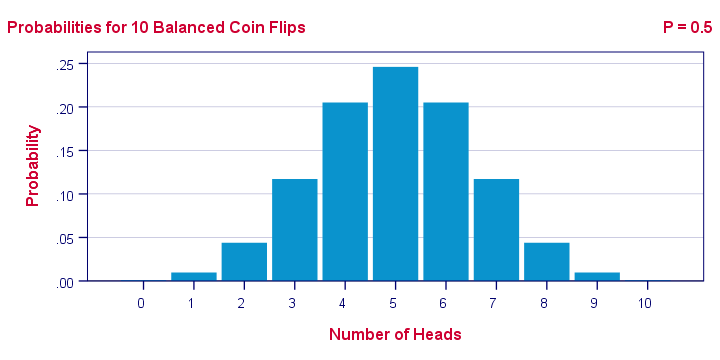

I have a coin and my null hypothesis is that it's balanced - which means it has a 0.5 chance of landing heads up. I flip my coin 10 times, which may result in 0 through 10 heads landing up. The probabilities for these outcomes -assuming my coin is really balanced- are shown below.

Keep in mind that probabilities are relative frequencies. So the 0.24 probability of finding 5 heads means that if I'd draw 1,000 samples of 10 coin flips, some 24% of those samples should result in 5 heads up.

Now, 9 of my 10 coin flips actually land heads up. The previous figure says that the probability of finding 9 or more heads in a sample of 10 coin flips is p = 0.01. If my coin is really balanced, the probability is only 1 in 100 of finding what I just found.

So, based on my sample of N = 10 coin flips, I reject the null hypothesis: I no longer believe that my coin was balanced after all.
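The two probabilities used in this example can be recomputed from the binomial distribution. The snippet below is a minimal sketch using only Python's standard library; the function name `binom_pmf` is my own and not part of the original tutorial.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float = 0.5) -> float:
    """Probability of exactly k heads in n flips when P(heads) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n_flips = 10

# Probability of exactly 5 heads under the null hypothesis (balanced coin)
p_five = binom_pmf(5, n_flips)

# 1-tailed p-value: probability of 9 OR MORE heads under the null hypothesis
p_nine_or_more = sum(binom_pmf(k, n_flips) for k in range(9, n_flips + 1))

print(f"P(exactly 5 heads) = {p_five:.3f}")
print(f"P(9 or more heads) = {p_nine_or_more:.3f}")
```

The exact values are 252/1024 and 11/1024, which agree with the 0.24 and 0.01 quoted above up to rounding.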

Example 2 - T-Test

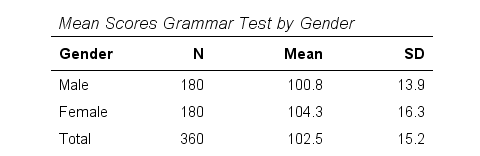

A sample of 360 people took a grammar test. We'd like to know if male respondents score differently than female respondents. Our null hypothesis is that on average, male respondents score the same number of points as female respondents. The table below summarizes the means and standard deviations for this sample.

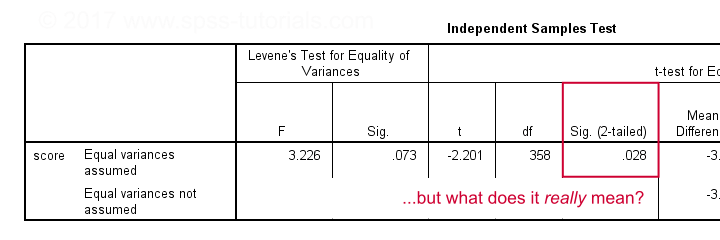

Note that females scored 3.5 points higher than males in this sample. However, samples typically differ somewhat from populations. The question is: if the mean scores for all males and all females are equal, then what's the probability of finding this mean difference or a more extreme one in a sample of N = 360? This question is answered by running an independent samples t-test.

Test Statistic - T

So what sample mean differences can we reasonably expect? Well, this depends on

- the standard deviations and

- the sample sizes we have.

We therefore standardize our mean difference of 3.5 points, resulting in t = -2.2. So this t-value -our test statistic- is simply the sample mean difference corrected for sample sizes and standard deviations. Interestingly, we know the sampling distribution -and hence the probability- for t.
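For reference, one common way to write this standardization is the pooled-variance form shown here. It assumes equal population variances; the text does not say which variant of the t-test was actually run, so treat this as an illustration rather than the exact computation behind t = -2.2.

$$
t = \frac{\bar{x}_1 - \bar{x}_2}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}},
\qquad
s_p^2 = \frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}
$$

Here $\bar{x}_1, \bar{x}_2$ are the two sample means, $s_1, s_2$ the sample standard deviations and $n_1, n_2$ the group sizes. Under the null hypothesis, t follows a t distribution with $n_1 + n_2 - 2$ degrees of freedom.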

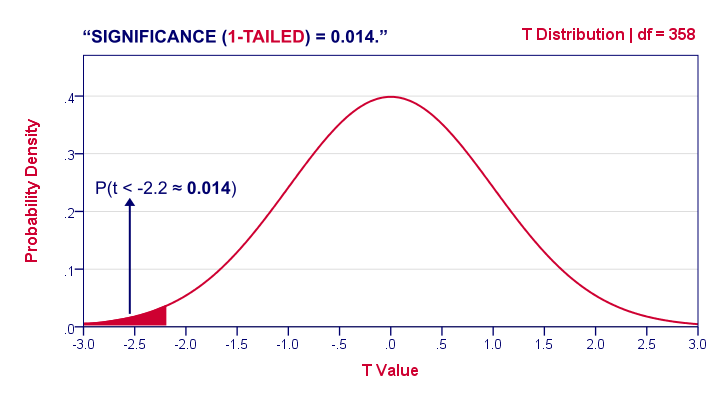

1-Tailed Statistical Significance

1-tailed statistical significance is the probability of finding a given deviation from the null hypothesis -or a larger one- in a sample. In our example, p (1-tailed) ≈ 0.014. The probability of finding t ≤ -2.2 -corresponding to our mean difference of 3.5 points- is 1.4%. If the population means are really equal and we'd draw 1,000 samples, we'd expect only 14 samples to come up with a mean difference of 3.5 points or larger.

In short, this sample outcome is very unlikely if the population mean difference is zero. We therefore reject the null hypothesis. Conclusion: men and women probably don't score equally on our test.

Some scientists will report precisely these results. However, a flaw here is that our reasoning suggests that we'd retain our null hypothesis if t is large rather than small. A large t-value ends up in the right tail of our distribution. However, our p-value only takes into account the left tail in which our (small) t-value of -2.2 ended up. If we take into account both possibilities, we should report p = 0.028, the 2-tailed significance.

2-Tailed Statistical Significance

2-tailed statistical significance is the probability of finding a given absolute deviation from the null hypothesis -or a larger one- in a sample. For a t-test, very small as well as very large t-values are unlikely under H0. Therefore, we shouldn't ignore the right tail of the distribution like we do when reporting a 1-tailed p-value. It suggests that we wouldn't reject the null hypothesis if t had been 2.2 instead of -2.2. However, both t-values are equally unlikely under H0.

A convention is to compute p for t = -2.2 and the opposite effect: t = 2.2. Adding them results in our 2-tailed p-value: p (2-tailed) = 0.028 in our example. Because the distribution is symmetrical around 0, these 2 p-values are equal. So we may just as well double our 1-tailed p-value.
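The 1-tailed and 2-tailed p-values for t = -2.2 can be approximated with Python's standard library. This is a rough sketch that assumes the t distribution for a sample of N = 360 is close enough to the standard normal distribution; an exact computation would use the t distribution itself and could differ slightly in the later decimals.

```python
from statistics import NormalDist

t_value = -2.2  # test statistic reported in the text

# Assumption: with N = 360, the t distribution is nearly standard normal.
std_normal = NormalDist()

# Left tail: probability of t <= -2.2
p_left = std_normal.cdf(t_value)

# Right tail: probability of the opposite effect, t >= 2.2
p_right = 1 - std_normal.cdf(-t_value)

# 2-tailed p-value: both tails added up
p_two_tailed = p_left + p_right

print(f"p (1-tailed) = {p_left:.3f}")
print(f"p (2-tailed) = {p_two_tailed:.3f}")
```

Because the distribution is symmetrical, `p_left` and `p_right` are equal, so the 2-tailed p-value is simply twice the 1-tailed one.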

1-Tailed or 2-Tailed Significance?

So should you report the 1-tailed or 2-tailed significance? First off, many statistical tests -such as ANOVA and chi-square tests- only result in a 1-tailed p-value so that's what you'll report. However, the question does apply to t-tests, z-tests and some others.

There's no full consensus among data analysts which approach is better. I personally always report 2-tailed p-values whenever available. A major reason is that when some test only yields a 1-tailed p-value, this often includes effects in different directions.

"What on earth is he tryi...?" That needs some explanation, correct?

T-Test or ANOVA?

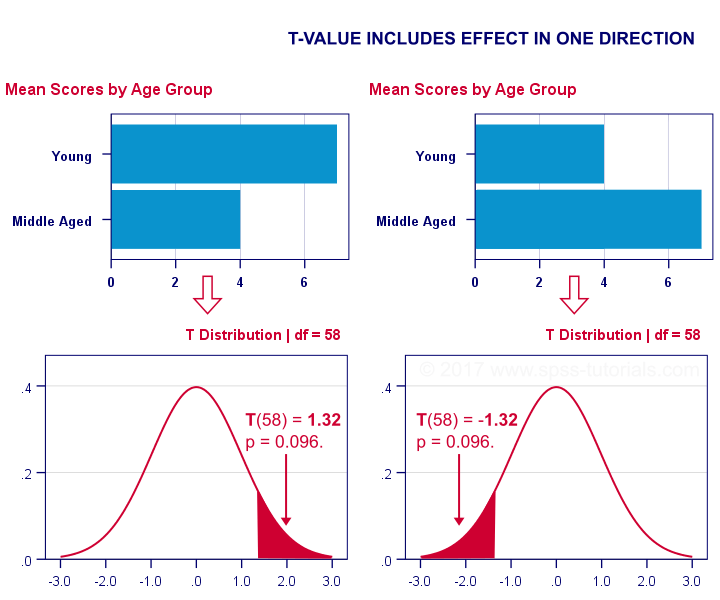

We compared young to middle aged people on a grammar test using a t-test. Let's say young people did better. This resulted in a 1-tailed significance of 0.096. This p-value does not include the opposite effect of the same magnitude: middle aged people doing better by the same number of points. The figure below illustrates these scenarios.

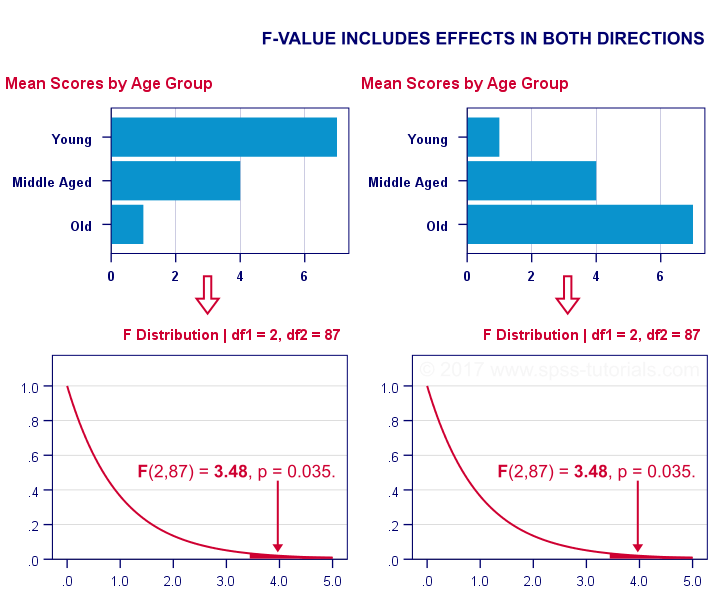

We then compared young, middle aged and old people using ANOVA. Young people performed best, old people performed worst and middle aged people are exactly in between. This resulted in a 1-tailed significance of 0.035. Now this p-value does include the opposite effect of the same magnitude.

Now, if p for ANOVA always includes effects in different directions, then why would you not include these when reporting a t-test? In fact, the independent samples t-test is technically a special case of ANOVA: if you run ANOVA on 2 groups, the resulting p-value will be identical to the 2-tailed significance from a t-test on the same data. The same principle applies to the z-test versus the chi-square test.
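The link between the two tests can be checked numerically: for 2 groups, the ANOVA F statistic equals the squared t statistic from the equal-variances t-test, which is why the ANOVA p-value matches the 2-tailed p-value. The sketch below uses made-up scores (the lists `young` and `middle_aged` are hypothetical, not the data behind this tutorial) and helper functions of my own naming.

```python
from statistics import mean


def pooled_t(a, b):
    """Independent samples t statistic, assuming equal variances."""
    n1, n2 = len(a), len(b)
    m1, m2 = mean(a), mean(b)
    ss1 = sum((x - m1) ** 2 for x in a)
    ss2 = sum((x - m2) ** 2 for x in b)
    pooled_var = (ss1 + ss2) / (n1 + n2 - 2)
    return (m1 - m2) / (pooled_var * (1 / n1 + 1 / n2)) ** 0.5


def anova_f(*groups):
    """One-way ANOVA F statistic for any number of groups."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical grammar test scores, for illustration only
young = [24, 27, 21, 30, 26, 23]
middle_aged = [22, 19, 25, 20, 24, 18]

t = pooled_t(young, middle_aged)
f = anova_f(young, middle_aged)

print(f"t squared = {t ** 2:.6f}")
print(f"F         = {f:.6f}")
```

Note that F stays the same if the two groups are swapped, whereas t flips its sign. So F cannot tell in which direction the effect runs, which is exactly why its p-value covers both directions.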

The "Alternative Hypothesis"

Reporting 1-tailed significance is sometimes defended by claiming that the researcher is expecting an effect in a given direction. However, I cannot verify that. Perhaps such "alternative hypotheses" were just made up in order to render results more statistically significant.

Second, expectations don't rule out possibilities. If somebody is absolutely sure that some effect will have some direction, then why use a statistical test in the first place?

Statistical Versus Practical Significance

So what does "statistical significance" really tell us? Well, it basically says that some effect is very probably not zero in some population. So is that what we really want to know? That a mean difference, correlation or other effect is "not zero"?

No. Of course not.

We really want to know how large some mean difference, correlation or other effect is. However, that's not what statistical significance tells us.

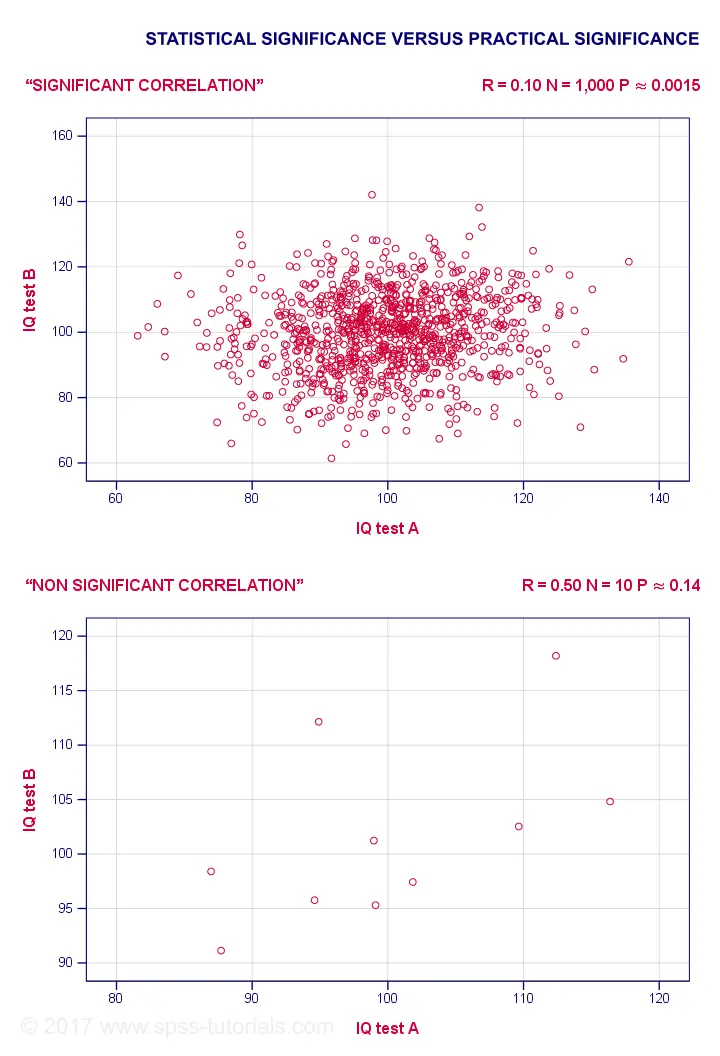

For example, a correlation of 0.1 in a sample of N = 1,000 has p ≈ 0.0015. This is highly statistically significant: the population correlation is very probably not 0.000... However, a 0.1 correlation is not distinguishable from 0 in a scatterplot. So it's probably not practically significant.

Conversely, a 0.5 correlation with N = 10 has p ≈ 0.14 and hence is not statistically significant. Nevertheless, a scatterplot shows a strong relation between our variables. However, since our sample size is very small, this strong relation may very well be limited to our small sample: a correlation at least this strong has a 14% chance of occurring if our population correlation is really zero.
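Both p-values follow from converting the correlation r into a t statistic with N - 2 degrees of freedom. The sketch below uses only the standard library and integrates the t density numerically with Simpson's rule; the function names are my own, and a statistics package would normally do this for you.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution (log-gamma avoids overflow)."""
    log_norm = (
        math.lgamma((df + 1) / 2)
        - math.lgamma(df / 2)
        - 0.5 * math.log(df * math.pi)
    )
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """2-tailed p-value via Simpson's rule on the density from 0 to |t|."""
    upper = abs(t)
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 < T < |t|)
    return 1 - 2 * central_half


def correlation_p(r: float, n: int) -> float:
    """2-tailed p-value for H0: population correlation is zero."""
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
    return two_tailed_p(t, n - 2)


p_weak_large_n = correlation_p(0.1, 1000)
p_strong_small_n = correlation_p(0.5, 10)

print(f"r = 0.1, N = 1000: p = {p_weak_large_n:.4f}")
print(f"r = 0.5, N = 10:   p = {p_strong_small_n:.2f}")
```

The weak correlation comes out as significant and the strong one does not, purely because of the difference in sample size.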

The basic problem here is that any nonzero effect is statistically significant if the sample size is large enough. And therefore, results must have both statistical and practical significance in order to carry any importance. Confidence intervals nicely combine these two pieces of information and can thus be argued to be more useful than just statistical significance.
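To illustrate, here are approximate 95% confidence intervals for the two correlations discussed above. This is a sketch based on the Fisher z-transformation, a standard approximation that the tutorial itself does not mention; the function name `correlation_ci` is my own.

```python
import math
from statistics import NormalDist


def correlation_ci(r: float, n: int, level: float = 0.95):
    """Approximate confidence interval for a correlation (Fisher z)."""
    z = math.atanh(r)            # transform r to the z scale
    se = 1 / math.sqrt(n - 3)    # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    # Build the interval on the z scale, then transform back to r
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


lo_weak, hi_weak = correlation_ci(0.1, 1000)
lo_strong, hi_strong = correlation_ci(0.5, 10)

print(f"r = 0.1, N = 1000: [{lo_weak:.2f}, {hi_weak:.2f}]")
print(f"r = 0.5, N = 10:   [{lo_strong:.2f}, {hi_strong:.2f}]")
```

The first interval is narrow and excludes zero, but only contains small correlations. The second includes zero, but is so wide that it tells us very little about the size of the effect.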

Thank you for reading!

Source: https://www.spss-tutorials.com/statistical-significance/
